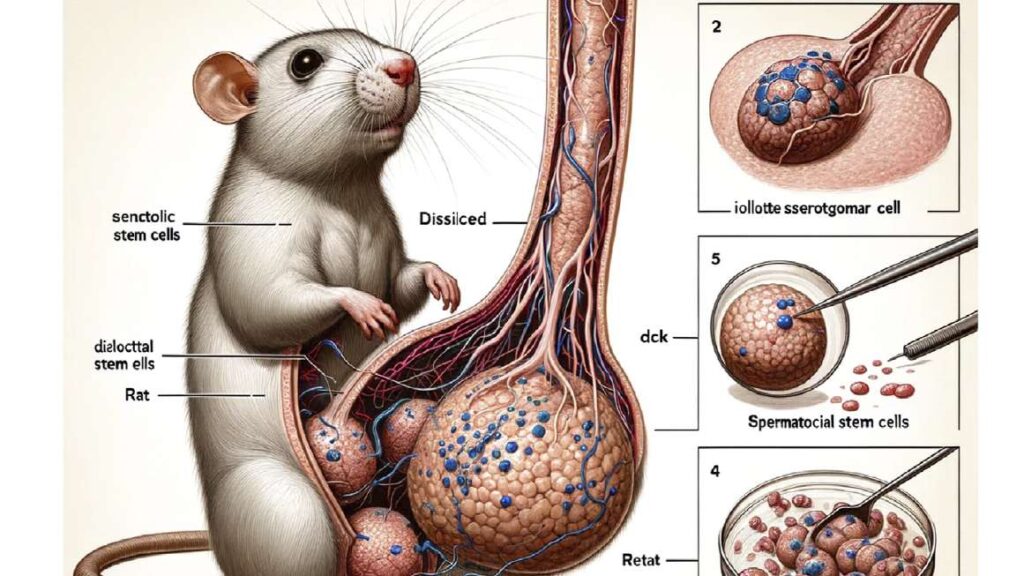

An illustration of a rat with a giant penis shown in cross-section has sparked fierce criticism of the use of generative artificial intelligence based on large language models (LLMs) in scientific publishing. The bizarre illustration is littered with meaningless labels, one of which happens to read “dck.” The article, about rat testicular stem cells, was peer-reviewed and vetted by an editor before being published in February in Frontiers in Cell and Developmental Biology.

“Mayday,” blared Gary Marcus, a longtime artificial intelligence researcher and critic, on X (formerly Twitter). Exasperated by AI’s ability to fuel “the exponential enshittification of science,” he warned that the sudden pollution of science with LLM-generated content, which is known to produce plausible-sounding but sometimes hard-to-detect errors (“hallucinations”), is serious and that its effects will be long-lasting.

A February 2024 article in Nature asked, “Does ChatGPT make scientists more effective?” Well, maybe, but Thomas Lancaster, a computer scientist and academic integrity expert at Imperial College London, warns that some researchers working under “publish or perish” pressure will covertly use artificial intelligence tools to churn out low-value research.

A 2023 Nature survey of 1,600 scientists found that nearly 30% had used generative artificial intelligence tools to write manuscripts. Most cited advantages of using AI tools, including faster processing of data and calculations and, more generally, savings of scientists’ time and money. More than 30% believed artificial intelligence will help generate new hypotheses and discoveries. On the other hand, most worried that AI tools will lead to greater reliance on pattern recognition without causal understanding, entrench data biases, make fraud easier, and lead to irreproducible research.

A September 2023 editorial in Nature warned, “We must not allow the coming flood of artificial intelligence information to fuel the proliferation of untrustworthy science.” The editorial added, “If we lose trust in original scientific documents, we will lose the foundation of humanity’s common knowledge base.”

Nonetheless, I suspect that AI-generated articles are proliferating. Some can be easily identified by their careless and blatantly unacknowledged use of LLMs. A recent article about liver surgery included the sentence: “I’m sorry, but I don’t have access to real-time information or patient-specific data because I am an AI language model.” Another, about lithium battery technology, begins with the standard helpful-AI phrasing: “Of course, this is a possible introduction to your topic.” Yet another, about European fuel-blending policy, includes the phrase “As far as I know until 2021.” More savvy users will remove such traces of AI before submitting their manuscripts.

There are also “tortured phrases” that strongly suggest a paper was substantially written with an LLM. A recent conference paper on statistical methods for detecting hate speech on social media produced several, including “Head Component Analysis” instead of “Principal Component Analysis,” “Gullible Bayes” instead of “Naive Bayes,” and “irregular outlying areas” instead of “random forest.”

Researchers and scientific publishers are fully aware that they must adapt to the generative artificial intelligence tools that are rapidly being integrated into scientific research and academic writing. A recent article in the British Medical Journal reported that 87 of the 100 top scientific journals now provide authors with guidance on using generative artificial intelligence. Nature and Science, for example, require authors to explicitly acknowledge and explain the use of generative AI in their studies and articles. Both prohibit peer reviewers from using artificial intelligence to evaluate manuscripts. Additionally, authors cannot list an AI as an author, and both journals generally do not allow AI-generated images, so no rat penis illustrations.

Meanwhile, the rat penis article has been retracted over concerns about its AI-generated illustrations, on the grounds that it did not meet the “standards of editorial and scientific rigor” of Frontiers in Cell and Developmental Biology.