In May 2023, OpenAI founder Sam Altman testified before the Senate Judiciary Committee about ChatGPT. Altman demonstrated how his company’s tools could drastically reduce the cost of retrieving, processing, transmitting and even modifying humanity’s collective knowledge stored in computer memories around the world. Users without special equipment or access can request research reports, stories, poems, or visual presentations and receive written responses within seconds.

Because of ChatGPT’s seemingly outsized power, Altman called for government regulation to “mitigate the risks of increasingly powerful AI systems” and suggested that U.S. or global leaders create an agency that would license AI systems, with the power to “revoke that license” and to ensure compliance with safety standards. Major AI producers around the world quickly responded to Altman’s clarion call of “I want to be supervised.”

Welcome to the brave new world of artificial intelligence and cozy crony capitalism, where industry players, interest groups and government agencies are constantly meeting to monitor and manage investor-owned companies.

Bootleggers and Baptists have a ‘printing press moment’

According to Altman, ChatGPT has approximately 100 million weekly users worldwide. Some claim it was the most successful consumer product launch in history, and Altman predicts even more users in the future. He is currently seeking U.S. government approval to raise billions of dollars from U.S., Middle Eastern and Asian investors to build a massive artificial intelligence chip manufacturing plant.

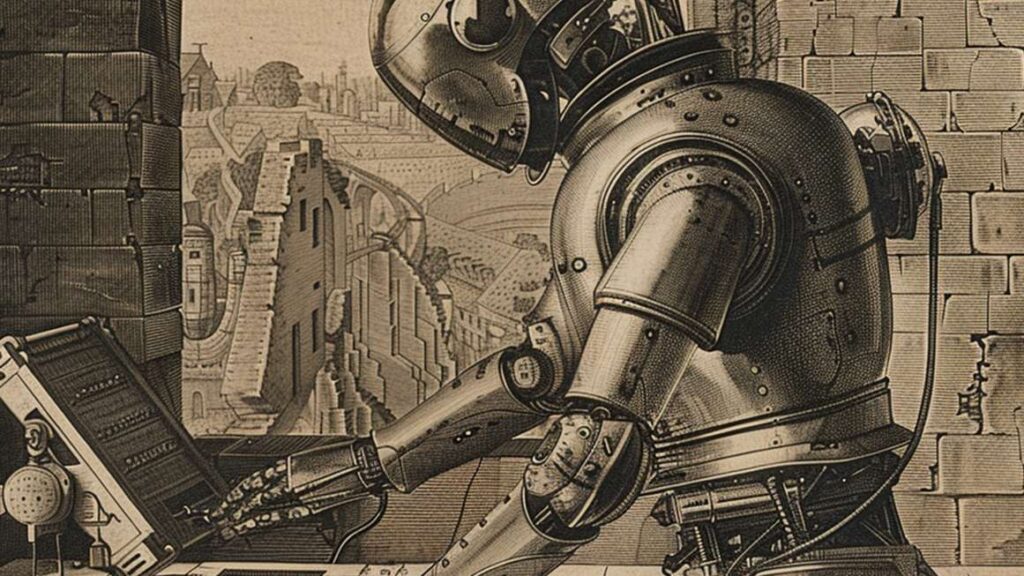

In his testimony, Altman referred to Johannes Gutenberg’s printing press, which launched the Enlightenment, revolutionized communication, and (following the motto “knowledge is power”) shook the political and religious regimes of the world. Altman said the world is once again facing a “printing press moment”: another era of profound change that could bring immeasurable benefits to human well-being, as well as unimaginable disruptions. A related letter signed by Altman, other AI industry executives, and dozens of other leaders in the field underscores their deep concerns, saying: “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

Altman’s call for regulation has parallels in the early history of the printing industry. In the 16th century, Queen Elizabeth I of England adopted licensing controls similar to those Altman suggested. She assigned transferable printing rights to specific members of the printing guilds in order to regulate and censor printing, but the effort was thwarted.

Altman’s moral appeal rests on the idea of protecting a country’s people or its way of life. In this way, it satisfies the “Baptist” part of the Bootleggers and Baptists theory of regulation that I developed decades ago, which explains why calls to “regulate me” may really be about businesses earning extra profits.

A particularly durable form of government regulation develops when it is supported by at least two interest groups, but for very different reasons. Some support the pending regulation for widely held moral reasons (old-school Baptists, for example, who want to ban legal Sunday alcohol sales). Others are in it for the money (like the bootleggers, who see an opportunity in a day without any legal competition).

Altman’s “Baptist” altar call may well be altruistic, but who knows? After all, a government-protected AI market could give his company a first-mover advantage, because it would help determine the standards that apply to everyone else. It could produce a newer, cozier version of crony capitalism that has never existed in quite the same form before.

Apparently some other would-be bootleggers heard the altar call and liked the idea of joining a cozy cartel. Soon, Microsoft insisted that governments needed to thoroughly regulate artificial intelligence. In another Baptist-like appeal, Microsoft President Brad Smith said: “The licensing system is fundamentally about ensuring a certain baseline of safety and capability. We have to prove we can drive before we can get a license. If we drive recklessly, we can lose it. We can apply these same concepts, especially when it comes to safe uses of artificial intelligence.”

Google felt the call, too, and issued a statement proposing AI regulation on a global and cross-agency basis. Google CEO Sundar Pichai emphasized that “artificial intelligence is too important not to be regulated, and too important not to be regulated well.” Another policy statement released by Google in February 2024 included a series of related initiatives to collaborate with competitors and government agencies to advance a safe and effective generative AI environment.

How will artificial intelligence regulate artificial intelligence?

In a December 2023 report on the activities of U.S. government agencies for fiscal year 2022, the Government Accountability Office said that 20 of the 23 agencies surveyed reported about 1,200 artificial intelligence activities, ranging from analyzing camera and radar data to preparing for planetary exploration.

In the past, regulatory episodes inspired by Bootleggers and Baptists often involved intensive studies and hearings, culminating in the creation of lasting rules. This was the case for much of the 20th century, when the Interstate Commerce Commission regulated prices, entry, and services in specific industries, and in the 1970s, when the Environmental Protection Agency was equipped with command-and-control regulations that were meant to address environmental problems.

It sometimes takes years to enact regulations that address a specific environmental problem. By the time the final rule is published and frozen in time, the problem it was meant to resolve may have fundamentally changed.

Cheap information brought by generative artificial intelligence changes all that. The generative AI regulatory processes now pending in California, at the federal level, and in other governments around the world so far favor ongoing, never-ending governance. These processes will focus on the major generative AI producers but will involve collaboration among industry leaders, consumer and citizen groups, scientists, and government officials, all of whom take part in the emerging cozy cronyism. Under the SMART Act, the Office of Artificial Intelligence will host an ongoing process to guide and influence generative AI outcomes.

If more traditional command-and-control or public utility regulation were used (which brings its own challenges), AI producers would be allowed to operate within a set of regulatory guardrails while responding to market incentives and opportunities. They would not need to cooperate with regulators, their own competitors, and other so-called stakeholders in this larger enterprise. In the new AI world, by contrast, bootleggers and Baptists now sit openly together.

Specifically, this emerging approach to AI regulation calls for “sandboxes,” in which regulated parties work with regulators and advisors to explore new algorithms and other AI products. While sandboxes can offer something beneficial to an industry in a new or uncertain regulatory environment, members of the unusually collaborative AI industry are positioned to learn what their competitors are developing, all under the watchful eye of government referees.

If this environment continues, the risk is that entirely new products and approaches in this area will never have a chance to be developed and reach consumers. In the new world of Bootleggers and Baptists, the incentive to discover and profit from new AI products will be diminished by cartel behavior. Countries and companies that refuse to abide by these rules may become the only source of significant new AI developments.

The End of the Wild West of Artificial Intelligence

Regardless of which form of regulation dominates, generative AI is here to stay. The need for computer power to process software has declined, while the application of generative artificial intelligence to new information challenges has exploded. At the same time, the regulatory capabilities of government agencies are increasing, and so are the rewards for regulated parties who work hand in hand with government and organized interest groups.

The Wild West era of generative artificial intelligence may be coming to an end. That was the era in which invention, expansion, and growth could occur without regulatory restrictions while still taking place within the confines of local courts, judges, property rights, and common law. New developments will still happen, but at a slower pace. The generative AI printing press moment may stretch into an era. Yet although Gutenberg’s invention initially led many major countries to regulate or even outlaw the printing press, new information technologies emerged anyway, making effective regulation of real knowledge impossible.

Despite official attempts to hinder the technology, a renaissance began. Along came the free press, and eventually the telegraph, the telephone, typewriters, mimeographs, photocopiers, fax machines, and the Internet itself. The flow of knowledge cannot be stopped. We moderns may once again realize that the human spirit cannot be suppressed forever and that, in the long run, market competition will be allowed, if not encouraged, to bring humankind closer to a more prosperous era.

The Founding Fathers of the United States were enlightened and well aware of the painstaking efforts in Europe to regulate Gutenberg’s printing press. They insisted on free speech and a free press. We may eventually see similar wisdom applied to the control of generative artificial intelligence, but don’t hold your breath.